Create MATLAB Reinforcement Learning Environments

In a reinforcement learning scenario, where you train an agent to complete a task, the environment models the external system (that is, the world) with which the agent interacts. In control systems applications, this external system is often referred to as the plant.

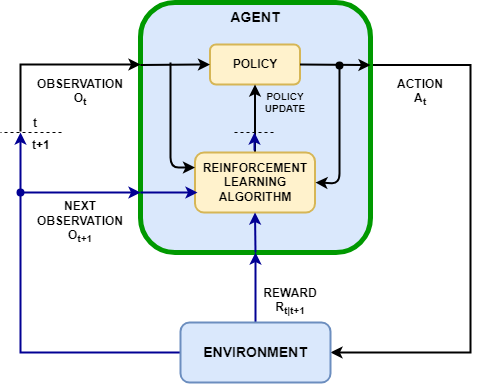

As shown in the preceding figure, the environment:

Receives actions from the agent.

Returns observations in response to the actions.

Generates a reward measuring how well the action contributes to achieving the task.

Creating an environment model involves defining:

Action and observation signals that the agent uses to interact with the environment.

A reward signal that the agent uses to measure its success. For more information, see Define Reward Signals.

The environment initial condition and its dynamic behavior.

Action and Observation Signals

When you create the environment object, you must specify the action and observation signals that the agent uses to interact with the environment. You can create both discrete and continuous action and observation spaces. For more information, see rlFiniteSetSpec and rlNumericSpec, respectively.
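As a minimal sketch of the two specification types, the following code defines a continuous observation channel and a discrete action channel. The dimensions, limits, element values, and names are illustrative assumptions, not values taken from a particular environment.

```matlab
% Continuous observation: a 3-by-1 vector with every entry bounded
% in [-1, 1] (dimension and limits are assumed for illustration).
obsInfo = rlNumericSpec([3 1],"LowerLimit",-1,"UpperLimit",1);
obsInfo.Name = "observations";

% Discrete action: the agent picks one of three torque values
% (the values themselves are assumed for illustration).
actInfo = rlFiniteSetSpec([-2 0 2]);
actInfo.Name = "torque";
```

You then pass specification objects such as these to the function that creates the environment.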

Which signals you select as actions and observations depends on your application. For example, for control system applications, the integrals (and sometimes derivatives) of error signals are often useful observations. Also, for reference-tracking applications, having a time-varying reference signal as an observation is helpful.

When you define your observation signals, ensure that all the environment states (or their estimates) are included in the observation vector. This is good practice because the agent is often a static function that lacks internal memory or state, so it might not be able to reconstruct the environment state internally.

For example, an image observation of a swinging pendulum has position information but does not have enough information, by itself, to determine the pendulum velocity. In this case, you can measure or estimate the pendulum velocity as an additional entry in the observation vector.
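One simple way to estimate the missing velocity is a backward difference between two consecutive angle measurements. The following sketch assumes a hypothetical sample time and illustrative angle values, and it uses the sine and cosine of the angle as a stand-in for the position information.

```matlab
Ts = 0.05;          % sample time in seconds (assumed)
thetaPrev = 0.10;   % previous angle measurement in radians (illustrative)
theta = 0.12;       % current angle measurement in radians (illustrative)

% Backward-difference estimate of the angular velocity.
thetaDot = (theta - thetaPrev)/Ts;

% Observation vector that carries both position and velocity information.
obs = [sin(theta); cos(theta); thetaDot];
```

A finite difference amplifies measurement noise, so a filtered estimate might be preferable in practice.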

Predefined MATLAB Environments

The Reinforcement Learning Toolbox™ software provides some predefined MATLAB® environments for which the actions, observations, rewards, and dynamics are already defined. You can use these environments to:

Learn reinforcement learning concepts.

Gain familiarity with Reinforcement Learning Toolbox software features.

Test your own reinforcement learning agents.

For more information, see Load Predefined Grid World Environments and Load Predefined Control System Environments.
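For instance, the following sketch loads the predefined discrete cart-pole environment and inspects its signal specifications. It assumes that Reinforcement Learning Toolbox is installed and uses the "CartPole-Discrete" keyword.

```matlab
% Load a predefined control system environment.
env = rlPredefinedEnv("CartPole-Discrete");

% The action and observation specifications are already defined.
obsInfo = getObservationInfo(env);
actInfo = getActionInfo(env);
disp(obsInfo.Dimension)
disp(actInfo.Elements)

% Reset the environment and get the initial observation.
initialObs = reset(env);
```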

Custom MATLAB Environments

You can create the following types of custom MATLAB environments for your own applications.

Grid worlds with specified size, rewards, and obstacles

Environments with dynamics specified using custom functions

Environments specified by creating and modifying a template environment object

Once you create a custom environment object, you can train an agent in the same manner as in a predefined environment. For more information on training agents, see Train Reinforcement Learning Agents.

Custom Grid Worlds

You can create custom grid worlds of any size with your own custom reward, state transition, and obstacle configurations. To create a custom grid world environment:

Create a grid world model using the createGridWorld function. For example, create a grid world named gw with ten rows and nine columns.

gw = createGridWorld(10,9);

Configure the grid world by modifying the properties of the model. For example, specify the terminal state as the location [7,9].

gw.TerminalStates = "[7,9]";

A grid world needs to be included in a Markov decision process (MDP) environment. Create an MDP environment for this grid world, which the agent uses to interact with the grid world model.

env = rlMDPEnv(gw);

For more information on custom grid worlds, see Create Custom Grid World Environments.
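The following sketch puts the grid world steps together and also configures obstacles and rewards. The obstacle locations, reward values, and start cell are assumptions chosen for illustration.

```matlab
% Create a 10-by-9 grid world and set its terminal state.
gw = createGridWorld(10,9);
gw.TerminalStates = "[7,9]";

% Add obstacle cells (locations assumed for illustration), then update
% the state transition matrix so the agent cannot enter them.
gw.ObstacleStates = ["[4,4]";"[4,5]";"[5,4]"];
updateStateTranObstacles(gw)

% Reward of -1 for every transition and +10 for reaching the terminal
% state (values assumed for illustration).
nS = numel(gw.States);
nA = numel(gw.Actions);
gw.R = -1*ones(nS,nS,nA);
gw.R(:,state2idx(gw,gw.TerminalStates),:) = 10;

% Wrap the model in an MDP environment and always start in cell [1,1].
env = rlMDPEnv(gw);
env.ResetFn = @() state2idx(gw,"[1,1]");
```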

Specify Custom Functions

For simple environments, you can define a custom environment object by creating an rlFunctionEnv object and specifying your own custom reset and step functions.

At the beginning of each training episode, the agent calls the reset function to set the environment initial condition. For example, you can specify known initial state values or place the environment into a random initial state.

The step function defines the dynamics of the environment, that is, how the state changes as a function of the current state and the agent action. At each training time step, the state of the model is updated using the step function.

For more information, see Create MATLAB Environment Using Custom Functions.
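As a minimal sketch, the following script builds an rlFunctionEnv object for a hypothetical double-integrator system. The function names myResetFcn and myStepFcn, the dynamics, the sample time, the reward, and the termination threshold are all assumptions made for illustration. The local functions must appear at the end of the script file.

```matlab
% Observation: 2-by-1 continuous vector [position; velocity].
obsInfo = rlNumericSpec([2 1]);
obsInfo.Name = "states";

% Action: one of two discrete force values.
actInfo = rlFiniteSetSpec([-1 1]);
actInfo.Name = "force";

% Create the environment from the custom step and reset functions.
env = rlFunctionEnv(obsInfo,actInfo,@myStepFcn,@myResetFcn);

function [initialObs,loggedSignals] = myResetFcn()
    % Start from a small random position with zero velocity.
    state = [0.1*(2*rand - 1); 0];
    loggedSignals.State = state;
    initialObs = state;
end

function [nextObs,reward,isDone,loggedSignals] = myStepFcn(action,loggedSignals)
    Ts = 0.1;                                  % sample time (assumed)
    x = loggedSignals.State;
    % Forward-Euler update of a double integrator driven by the action.
    xNext = [x(1) + Ts*x(2); x(2) + Ts*action];
    loggedSignals.State = xNext;
    nextObs = xNext;
    reward = -(xNext(1)^2 + 0.1*xNext(2)^2);   % penalize distance from origin
    isDone = abs(xNext(1)) > 5;                % stop when far from origin
end
```

The state is carried between calls in the LoggedSignals structure, which keeps the step function free of persistent or global variables.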

Create and Modify Template Environment

For more complex environments, you can define a custom environment by creating and modifying a template environment. To create a custom environment:

Create an environment template class using the rlCreateEnvTemplate function.

Modify the template environment, specifying environment properties, required environment functions, and optional environment functions.

Validate your custom environment using validateEnvironment.

For more information, see Create Custom MATLAB Environment from Template.
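The template workflow can be sketched as follows. The class name MyEnvironment is a hypothetical choice, and the sketch assumes that the generated template file is on the MATLAB path and has a constructor that takes no arguments.

```matlab
% Generate MyEnvironment.m in the current folder and open it for editing.
rlCreateEnvTemplate("MyEnvironment");

% After editing the class file, create an instance of the environment.
env = MyEnvironment;

% Check that reset and step are consistent with the environment
% observation and action specifications.
validateEnvironment(env)
```

validateEnvironment throws an error if it finds a problem, so a call that returns without error indicates that the environment passed the checks.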