Pretrained Deep Neural Networks

You can take a pretrained image classification neural network that has already learned to extract powerful and informative features from natural images and use it as a starting point to learn a new task. The majority of the pretrained neural networks are trained on a subset of the ImageNet database [1], which is used in the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) [2]. These neural networks have been trained on more than a million images and can classify images into 1000 object categories, such as keyboard, coffee mug, pencil, and many animals. Using a pretrained neural network with transfer learning is typically much faster and easier than training a neural network from scratch.

You can use previously trained neural networks for the following tasks:

| Purpose | Description |
|---|---|
| Classification | Apply pretrained neural networks directly to classification problems. To classify a new image, use |
| Feature Extraction | Use a pretrained neural network as a feature extractor by using the layer activations as features. You can use these activations as features to train another machine learning model, such as a support vector machine (SVM). For more information, see Feature Extraction. For an example, see Extract Image Features Using Pretrained Network. |
| Transfer Learning | Take layers from a neural network trained on a large data set and fine-tune on a new data set. For more information, see Transfer Learning. For a simple example, see Get Started with Transfer Learning. To try more pretrained neural networks, see Train Deep Learning Network to Classify New Images. |
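As a rough illustration of applying a pretrained network directly to classification, the sketch below loads SqueezeNet, resizes a sample image to the network input size, and predicts a label. It assumes the SqueezeNet support package is installed, that `peppers.png` (a sample image shipped with MATLAB) is on the path, and that the `classify` function accepts the loaded network; newer releases may recommend other prediction functions. The sketch also uses `imresize`, which may require Image Processing Toolbox.

```matlab
% Load SqueezeNet (requires its support package)
net = squeezenet;
inputSize = net.Layers(1).InputSize;      % [227 227 3]

% Read a sample image that ships with MATLAB and resize it to the input size
I = imread('peppers.png');
I = imresize(I, inputSize(1:2));

% Predict the class label and the score for every ImageNet class
[label, scores] = classify(net, I);
fprintf('Predicted: %s (score %.2f)\n', string(label), max(scores));
```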

Compare Pretrained Neural Networks

Pretrained neural networks have different characteristics that matter when choosing a neural network to apply to your problem. The most important characteristics are neural network accuracy, speed, and size. Choosing a neural network is generally a tradeoff between these characteristics. Use the plot below to compare the ImageNet validation accuracy with the time required to make a prediction using the neural network.

Tip

To get started with transfer learning, try choosing one of the faster neural networks, such as SqueezeNet or GoogLeNet. You can then iterate quickly and try out different settings such as data preprocessing steps and training options. Once you have a feel for which settings work well, try a more accurate neural network such as Inception-v3 or a ResNet and see if that improves your results.

Note

The plot above only shows an indication of the relative speeds of the different neural networks. The exact prediction and training iteration times depend on the hardware and mini-batch size that you use.

A good neural network has a high accuracy and is fast. The plot displays the classification accuracy versus the prediction time when using a modern GPU (an NVIDIA® Tesla® P100) and a mini-batch size of 128. The prediction time is measured relative to the fastest neural network. The area of each marker is proportional to the size of the neural network on disk.
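You can approximate relative prediction times like those in the plot on your own hardware. The following is one possible sketch, assuming the SqueezeNet and GoogLeNet support packages are installed: it times `predict` on a single mini-batch of 128 random images with `timeit` (which returns the median of several runs) and divides each time by the fastest one. Random inputs are used only because timing does not depend on image content; absolute and relative numbers will differ from the P100 results.

```matlab
% Networks to compare (support packages required)
nets  = {squeezenet, googlenet};
names = {'squeezenet', 'googlenet'};
miniBatchSize = 128;

t = zeros(numel(nets), 1);
for k = 1:numel(nets)
    net = nets{k};
    inputSize = net.Layers(1).InputSize;

    % One mini-batch of random images at the network input size
    X = rand([inputSize miniBatchSize], 'single');

    % Time a full prediction pass over the mini-batch
    t(k) = timeit(@() predict(net, X, 'MiniBatchSize', miniBatchSize));
end

% Time relative to the fastest network, as in the plot
relativeTime = t ./ min(t);
table(names', t, relativeTime, 'VariableNames', {'Network', 'Seconds', 'RelativeTime'})
```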

The classification accuracy on the ImageNet validation set is the most common way to measure the accuracy of neural networks trained on ImageNet. Neural networks that are accurate on ImageNet are also often accurate when you apply them to other natural image data sets using transfer learning or feature extraction. This generalization is possible because the neural networks have learned to extract powerful and informative features from natural images that generalize to other similar data sets. However, high accuracy on ImageNet does not always transfer directly to other tasks, so it is a good idea to try multiple neural networks.

If you want to perform prediction using constrained hardware or distribute neural networks over the Internet, then also consider the size of the neural network on disk and in memory.

Neural Network Accuracy

There are multiple ways to calculate the classification accuracy on the ImageNet validation set and different sources use different methods. Sometimes an ensemble of multiple models is used and sometimes each image is evaluated multiple times using multiple crops. Sometimes the top-5 accuracy instead of the standard (top-1) accuracy is quoted. Because of these differences, it is often not possible to directly compare the accuracies from different sources. The accuracies of pretrained neural networks in Deep Learning Toolbox™ are standard (top-1) accuracies using a single model and single central image crop.
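To make the difference between top-1 and top-5 accuracy concrete, the following sketch computes both from a matrix of class scores. The scores and true labels are made-up values for four images and six classes, chosen only for illustration; for ImageNet there would be 1000 columns, one per class.

```matlab
% Hypothetical scores: one row per image, one column per class
scores = [0.10 0.50 0.20 0.10 0.05 0.05;
          0.30 0.20 0.25 0.15 0.05 0.05;
          0.08 0.02 0.10 0.20 0.25 0.35;
          0.40 0.01 0.09 0.10 0.20 0.20];
trueIdx = [2; 3; 1; 2];   % true class index of each image

% Rank the classes of each image from most to least likely
[~, ranked] = sort(scores, 2, 'descend');

% Top-1: the highest-scoring class must be the true class
top1 = mean(ranked(:, 1) == trueIdx);

% Top-5: the true class must be among the five highest-scoring classes
top5 = mean(any(ranked(:, 1:5) == trueIdx, 2));

fprintf('top-1 = %.2f, top-5 = %.2f\n', top1, top5);   % top-1 = 0.25, top-5 = 0.75
```

The same network can therefore report very different numbers depending on which metric a source quotes.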

Load Pretrained Neural Networks

To load the SqueezeNet neural network, type squeezenet at the command line.

net = squeezenet;

For other neural networks, use functions such as googlenet to get links to download pretrained neural networks from the Add-On Explorer.
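After loading a network, you can read properties such as the image input size and the class names directly from its layers. This sketch assumes the GoogLeNet support package is installed; if it is not, calling `googlenet` reports an error with a download link, as described above.

```matlab
% Load GoogLeNet (errors with a download link if the support package is missing)
net = googlenet;

% Input size expected by the image input layer
inputSize = net.Layers(1).InputSize     % [224 224 3]

% Class names stored in the final classification layer
classNames = net.Layers(end).Classes;
numel(classNames)                       % 1000 ImageNet categories
```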

The following table lists the available pretrained neural networks trained on ImageNet and some of their properties. The neural network depth is defined as the largest number of sequential convolutional or fully connected layers on a path from the input layer to the output layer. The inputs to all neural networks are RGB images.

| Neural Network | Depth | Size | Parameters (Millions) | Image Input Size |
|---|---|---|---|---|
| squeezenet | 18 | 5.2 MB | 1.24 | 227-by-227 |
| googlenet | 22 | 27 MB | 7.0 | 224-by-224 |
| inceptionv3 | 48 | 89 MB | 23.9 | 299-by-299 |
| densenet201 | 201 | 77 MB | 20.0 | 224-by-224 |
| mobilenetv2 | 53 | 13 MB | 3.5 | 224-by-224 |
| resnet18 | 18 | 44 MB | 11.7 | 224-by-224 |
| resnet50 | 50 | 96 MB | 25.6 | 224-by-224 |
| resnet101 | 101 | 167 MB | 44.6 | 224-by-224 |
| xception | 71 | 85 MB | 22.9 | 299-by-299 |
| inceptionresnetv2 | 164 | 209 MB | 55.9 | 299-by-299 |
| shufflenet | 50 | 5.4 MB | 1.4 | 224-by-224 |
| nasnetmobile | * | 20 MB | 5.3 | 224-by-224 |
| nasnetlarge | * | 332 MB | 88.9 | 331-by-331 |
| darknet19 | 19 | 78 MB | 20.8 | 256-by-256 |
| darknet53 | 53 | 155 MB | 41.6 | 256-by-256 |
| efficientnetb0 | 82 | 20 MB | 5.3 | 224-by-224 |
| alexnet | 8 | 227 MB | 61.0 | 227-by-227 |
| vgg16 | 16 | 515 MB | 138 | 224-by-224 |
| vgg19 | 19 | 535 MB | 144 | 224-by-224 |

*The NASNet-Mobile and NASNet-Large neural networks do not consist of a linear sequence of modules.
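You can roughly check the parameter counts in the table by summing the elements of each layer's learnable arrays. The sketch below assumes that the learnables live in properties named `Weights` and `Bias` (true for convolution and fully connected layers), so it undercounts networks that contain batch normalization or other layers with differently named learnables. It also saves the network to a MAT-file as one measure of size on disk; because MAT-files are compressed, that figure need not match the Size column.

```matlab
net = squeezenet;

% Sum the elements of the Weights and Bias arrays of every layer that has them
numParams = 0;
for i = 1:numel(net.Layers)
    L = net.Layers(i);
    if isprop(L, 'Weights')
        numParams = numParams + numel(L.Weights) + numel(L.Bias);
    end
end
fprintf('Learnable parameters: %.2f million\n', numParams/1e6);

% Save to a (compressed) MAT-file and report its size on disk
fileName = 'squeezenet_net.mat';
save(fileName, 'net');
info = dir(fileName);
fprintf('MAT-file size: %.1f MB\n', info.bytes/1e6);
```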

GoogLeNet Trained on Places365

The standard GoogLeNet neural network is trained on the ImageNet data set, but you can also load a neural network trained on the Places365 data set [3] [4]. The neural network trained on Places365 classifies images into 365 different place categories, such as field, park, runway, and lobby. To load a pretrained GoogLeNet neural network trained on the Places365 data set, use googlenet('Weights','places365'). When performing transfer learning on a new task, the most common approach is to use neural networks pretrained on ImageNet. If the new task is similar to classifying scenes, then using the neural network trained on Places365 could give higher accuracy.
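A short sketch of loading the Places365 variant and checking its categories follows. It assumes the GoogLeNet support package with Places365 weights is installed and reuses the `peppers.png` sample image purely as a stand-in input; a scene photograph would be a more meaningful test.

```matlab
% Load GoogLeNet with weights trained on Places365
net = googlenet('Weights','places365');

% The final classification layer stores the place categories
classNames = net.Layers(end).Classes;
numel(classNames)      % 365

% Classify an image into one of the scene categories
inputSize = net.Layers(1).InputSize;
I = imresize(imread('peppers.png'), inputSize(1:2));
label = classify(net, I)
```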

For information about pretrained neural networks suitable for audio tasks, see Pretrained Neural Networks for Audio Applications.

Visualize Pretrained Neural Networks

You can load and visualize pretrained neural networks using Deep Network Designer.

deepNetworkDesigner(squeezenet)

To view and edit layer properties, select a layer. Click the help icon next to the layer name for information on the layer properties.

Explore other pretrained neural networks in Deep Network Designer by clicking New.

If you need to download a neural network, pause on the desired neural network and click Install to open the Add-On Explorer.

Feature Extraction

Feature extraction is an easy and fast way to use the power of deep learning without investing time and effort into training a full neural network. Because it only requires a single pass over the training images, it is especially useful if you do not have a GPU. You extract learned image features using a pretrained neural network, and then use those features to train a classifier, such as a support vector machine using fitcsvm (Statistics and Machine Learning Toolbox).
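The workflow above can be sketched as follows. Because `fitcsvm` trains a two-class SVM, this sketch swaps in `fitcecoc`, which combines binary SVM learners into a multiclass classifier. The folder name `myImages` (one subfolder per class) is hypothetical, and the layer name `pool5` is an assumption about ResNet-18's global pooling layer that you can confirm with `analyzeNetwork(net)`.

```matlab
% Hypothetical image folder with one subfolder per class
imds = imageDatastore('myImages', 'IncludeSubfolders', true, 'LabelSource', 'foldernames');
[imdsTrain, imdsTest] = splitEachLabel(imds, 0.7, 'randomized');

% Pretrained ResNet-18 (support package required)
net = resnet18;
inputSize = net.Layers(1).InputSize;

% Resize images on the fly and convert grayscale images to RGB
augTrain = augmentedImageDatastore(inputSize(1:2), imdsTrain, 'ColorPreprocessing', 'gray2rgb');
augTest  = augmentedImageDatastore(inputSize(1:2), imdsTest,  'ColorPreprocessing', 'gray2rgb');

% Single pass over the data: pooled activations, one row per image
layer = 'pool5';   % assumed layer name
featuresTrain = activations(net, augTrain, layer, 'OutputAs', 'rows');
featuresTest  = activations(net, augTest,  layer, 'OutputAs', 'rows');

% Multiclass classifier built from binary SVM learners
classifier = fitcecoc(featuresTrain, imdsTrain.Labels);

% Evaluate on the held-out images
YPred = predict(classifier, featuresTest);
accuracy = mean(YPred == imdsTest.Labels)
```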

Try feature extraction when your new data set is very small. Since you only train a simple classifier on the extracted features, training is fast. It is also unlikely that fine-tuning deeper layers of the neural network improves the accuracy since there is little data to learn from.

If your data is very similar to the original data, then the more specific features extracted deeper in the neural network are likely to be useful for the new task.

If your data is very different from the original data, then the features extracted deeper in the neural network might be less useful for your task. Try training the final classifier on more general features extracted from an earlier neural network layer. If the new data set is large, then you can also try training a neural network from scratch.
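To try more general features from an earlier layer, you only need to change which layer you read activations from. In this sketch the layer name `res3b_relu` is an assumption about ResNet-18's layer naming (check it with `analyzeNetwork(net)`), and `myImages` is a hypothetical folder. Averaging over the spatial dimensions is a design choice made here to keep each feature vector short; reading the layer with `'OutputAs','rows'` instead would keep every spatial position at the cost of much longer vectors.

```matlab
% Hypothetical image folder with one subfolder per class
imds = imageDatastore('myImages', 'IncludeSubfolders', true, 'LabelSource', 'foldernames');

net = resnet18;
inputSize = net.Layers(1).InputSize;
augimds = augmentedImageDatastore(inputSize(1:2), imds, 'ColorPreprocessing', 'gray2rgb');

% Earlier layer with more general features (assumed name)
layer = 'res3b_relu';
act = activations(net, augimds, layer);        % h-by-w-by-c-by-N array

% Average over height and width to get one c-element vector per image
features = squeeze(mean(act, [1 2]))';         % N-by-c matrix

% Train the final classifier on the earlier-layer features
classifier = fitcecoc(features, imds.Labels);
```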

ResNets are often good feature extractors. For an example showing how to use a pretrained neural network for feature extraction, see Extract Image Features Using Pretrained Network.

Transfer Learning

You can fine-tune deeper layers in the neural network by training the neural network on your new data set with the pretrained neural network as a starting point. Fine-tuning a neural network with transfer learning is often faster and easier than constructing and training a new neural network. The neural network has already learned a rich set of image features, but when you fine-tune the neural network it can learn features specific to your new data set. If you have a very large data set, then transfer learning might not be faster than training from scratch.

Tip

Fine-tuning a neural network often gives the highest accuracy. For very small data sets (fewer than about 20 images per class), try feature extraction instead.
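One way to apply this rule of thumb is to count the images in each class before choosing an approach. The folder `myImages` is hypothetical, and the threshold of 20 simply mirrors the approximate figure in the tip.

```matlab
% Hypothetical image folder with one subfolder per class
imds = imageDatastore('myImages', 'IncludeSubfolders', true, 'LabelSource', 'foldernames');

% Number of images in each class
tbl = countEachLabel(imds)

% Compare the smallest class against the approximate threshold
if min(tbl.Count) < 20
    disp('Some classes have fewer than about 20 images: try feature extraction.')
else
    disp('Enough images per class to try fine-tuning.')
end
```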

Fine-tuning a neural network is slower and requires more effort than simple feature extraction, but since the neural network can learn to extract a different set of features, the final neural network is often more accurate. Fine-tuning usually works better than feature extraction as long as the new data set is not very small, because then the neural network has data to learn new features from. For examples showing how to perform transfer learning, see Transfer Learning with Deep Network Designer and Train Deep Learning Network to Classify New Images.

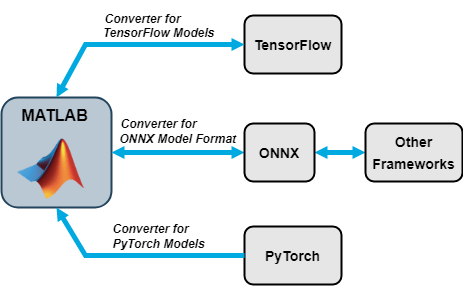
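A minimal fine-tuning sketch with SqueezeNet follows. It assumes the layer names `conv10` (the final 1-by-1 convolution) and `ClassificationLayer_predictions` (the output layer), which you can confirm with `analyzeNetwork(net)`, and a hypothetical folder `myImages` with one subfolder per class. The learn-rate factors, learning rate, and epoch count are illustrative starting values, not tuned recommendations.

```matlab
% Hypothetical image folder with one subfolder per class
imds = imageDatastore('myImages', 'IncludeSubfolders', true, 'LabelSource', 'foldernames');
[imdsTrain, imdsVal] = splitEachLabel(imds, 0.7, 'randomized');
numClasses = numel(categories(imdsTrain.Labels));

% Start from pretrained SqueezeNet and edit its layer graph
net = squeezenet;
inputSize = net.Layers(1).InputSize;
lgraph = layerGraph(net);

% Replace the final convolution so it outputs one channel per new class;
% larger learn-rate factors let the new layer learn faster than the pretrained ones
newConv = convolution2dLayer(1, numClasses, 'Name', 'new_conv', ...
    'WeightLearnRateFactor', 10, 'BiasLearnRateFactor', 10);
lgraph = replaceLayer(lgraph, 'conv10', newConv);

% Replace the output layer; it takes its class names from the training data
lgraph = replaceLayer(lgraph, 'ClassificationLayer_predictions', ...
    classificationLayer('Name', 'new_classoutput'));

% Resize images on the fly to the network input size
augTrain = augmentedImageDatastore(inputSize(1:2), imdsTrain, 'ColorPreprocessing', 'gray2rgb');
augVal   = augmentedImageDatastore(inputSize(1:2), imdsVal,   'ColorPreprocessing', 'gray2rgb');

% Small learning rate so the pretrained weights change slowly
options = trainingOptions('sgdm', ...
    'InitialLearnRate', 1e-4, ...
    'MaxEpochs', 6, ...
    'MiniBatchSize', 32, ...
    'ValidationData', augVal, ...
    'Verbose', false);

netTransfer = trainNetwork(augTrain, lgraph, options);

% Accuracy on the validation images
YPred = classify(netTransfer, augVal);
accuracy = mean(YPred == imdsVal.Labels)
```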

Import and Export Neural Networks

You can import neural networks and layer graphs from TensorFlow™ 2, TensorFlow-Keras, PyTorch®, and the ONNX™ (Open Neural Network Exchange) model format. You can also export Deep Learning Toolbox neural networks and layer graphs to TensorFlow 2 and the ONNX model format.

Import Functions

| External Deep Learning Platform and Model Format | Import Model as Neural Network | Import Model as Layer Graph |
|---|---|---|
| TensorFlow neural network in SavedModel format | importTensorFlowNetwork | importTensorFlowLayers |
| TensorFlow-Keras neural network in HDF5 or JSON format | importKerasNetwork | importKerasLayers |
| Traced PyTorch model in .pt file | importNetworkFromPyTorch | Not applicable |
| Neural network in ONNX model format | importONNXNetwork | importONNXLayers |

The importTensorFlowNetwork and importTensorFlowLayers functions are recommended over the importKerasNetwork and importKerasLayers functions. For more information, see Recommended Functions to Import TensorFlow Models.

The importTensorFlowNetwork, importTensorFlowLayers, importNetworkFromPyTorch, importONNXNetwork, and importONNXLayers functions create automatically generated custom layers when you import a model with TensorFlow layers, PyTorch layers, or ONNX operators that the functions cannot convert to built-in MATLAB® layers. The functions save the automatically generated custom layers to a package in the current folder. For more information, see Autogenerated Custom Layers.
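The import functions above can be called as in the following sketch. All file and folder names are hypothetical, and the `'PackageName'` argument that names the folder for autogenerated custom layers is an assumption about the releases these functions shipped in; check the documentation for your release for the exact argument name.

```matlab
% Hypothetical model locations
onnxFile    = 'model.onnx';    % ONNX model file
tfFolder    = 'myTFModel';     % TensorFlow SavedModel folder
pytorchFile = 'model.pt';      % traced PyTorch model

% ONNX model as a network ready for classification
netONNX = importONNXNetwork(onnxFile, 'OutputLayerType', 'classification');

% TensorFlow SavedModel; any autogenerated custom layers are saved to the
% package folder +myTFModelLayers in the current folder
netTF = importTensorFlowNetwork(tfFolder, ...
    'OutputLayerType', 'classification', ...
    'PackageName', 'myTFModelLayers');

% Traced PyTorch model, imported as a dlnetwork object
netPT = importNetworkFromPyTorch(pytorchFile);

% Inspect the imported architecture, including any custom layers
analyzeNetwork(netTF)
```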

Export Functions

| Export Neural Network or Layer Graph | External Deep Learning Platform and Model Format |
|---|---|
| exportNetworkToTensorFlow | TensorFlow 2 model in Python® package |
| exportONNXNetwork | ONNX model format |

The exportNetworkToTensorFlow function saves a Deep Learning Toolbox neural network or layer graph as a TensorFlow model in a Python package. For more information on how to load the exported model and save it in a standard TensorFlow format, see Load Exported TensorFlow Model and Save Exported TensorFlow Model in Standard Format.
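A minimal export sketch follows; the package name `mySqueezeNet` is hypothetical, and loading the generated package from Python is covered by the topics referenced above.

```matlab
net = squeezenet;

% Create the Python package folder mySqueezeNet in the current folder
exportNetworkToTensorFlow(net, 'mySqueezeNet');

% List the generated files
dir('mySqueezeNet')
```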

By using ONNX as an intermediate format, you can interoperate with other deep learning frameworks that support ONNX model export or import.
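As a quick interoperability check, you might export a network to ONNX, import the file back, and compare predictions. This is a sketch under the assumption that both support packages (ONNX converter and SqueezeNet) are installed; the file name is hypothetical. The scores should typically be close, but small numerical differences, or differences in how input normalization is exported, could appear.

```matlab
net = squeezenet;
classNames = net.Layers(end).Classes;

% Export to ONNX, then import the file back as a classification network
exportONNXNetwork(net, 'squeezenet.onnx');
net2 = importONNXNetwork('squeezenet.onnx', ...
    'OutputLayerType', 'classification', 'Classes', classNames);

% Compare the scores of the original and re-imported networks on one image
inputSize = net.Layers(1).InputSize;
I = imresize(imread('peppers.png'), inputSize(1:2));
scores1 = predict(net, I);
scores2 = predict(net2, I);
maxDiff = max(abs(scores1 - scores2))
```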

Pretrained Neural Networks for Audio Applications

Audio Toolbox™ provides the pretrained VGGish, YAMNet, OpenL3, and CREPE neural networks.

Use the vggish (Audio Toolbox), yamnet (Audio Toolbox), openl3 (Audio Toolbox), and crepe (Audio Toolbox) functions in MATLAB or the VGGish (Audio Toolbox) and YAMNet (Audio Toolbox) blocks in Simulink® to interact directly with the pretrained neural networks. You can also import and visualize audio pretrained neural networks using Deep Network Designer.

The following table lists the available pretrained audio neural networks and some of their properties.

| Neural Network | Depth | Size | Parameters (Millions) | Input Size |
|---|---|---|---|---|
| crepe (Audio Toolbox) | 7 | 89.1 MB | 22.2 | 1024-by-1-by-1 |
| openl3 (Audio Toolbox) | 8 | 18.8 MB | 4.68 | 128-by-199-by-1 |
| vggish (Audio Toolbox) | 9 | 289 MB | 72.1 | 96-by-64-by-1 |
| yamnet (Audio Toolbox) | 28 | 15.5 MB | 3.75 | 96-by-64-by-1 |

Use VGGish and YAMNet to perform transfer learning and feature extraction. Extract VGGish or OpenL3 feature embeddings to input to machine learning and deep learning systems. The classifySound (Audio Toolbox) function and the Sound Classifier (Audio Toolbox) block use YAMNet to locate and classify sounds into one of 521 categories. The pitchnn (Audio Toolbox) function uses CREPE to perform deep learning pitch estimation.
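The following sketch calls these audio functions on one recording. It assumes Audio Toolbox with the YAMNet, VGGish, and CREPE support packages, that the sample file `Counting-16-44p1-mono-15secs.wav` ships with Audio Toolbox, and that `vggishFeatures` (not mentioned above) is available in your release as a convenience wrapper that returns VGGish embeddings.

```matlab
% Sample recording assumed to ship with Audio Toolbox
[audioIn, fs] = audioread('Counting-16-44p1-mono-15secs.wav');

% YAMNet-based detection and classification of sounds
sounds = classifySound(audioIn, fs)

% VGGish embeddings: one 128-element vector per analysis hop
embeddings = vggishFeatures(audioIn, fs);
size(embeddings)

% CREPE-based pitch estimate for each analysis frame
f0 = pitchnn(audioIn, fs);
```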

For examples showing how to adapt pretrained audio neural networks for a new task, see Transfer Learning with Pretrained Audio Networks (Audio Toolbox) and Transfer Learning with Pretrained Audio Networks in Deep Network Designer.

For more information on using deep learning for audio applications, see Deep Learning for Audio Applications (Audio Toolbox).

Pretrained Models on GitHub

To find the latest pretrained models, see MATLAB Deep Learning Model Hub.

For example:

- For transformer models, such as GPT-2, BERT, and FinBERT, see the Transformer Models for MATLAB GitHub® repository.

- For a pretrained EfficientDet-D0 object detection model, see the Pretrained EfficientDet Network For Object Detection GitHub repository.

References

[1] ImageNet. http://www.image-net.org

[2] Russakovsky, O., Deng, J., Su, H., et al. "ImageNet Large Scale Visual Recognition Challenge." International Journal of Computer Vision (IJCV). Vol 115, Issue 3, 2015, pp. 211–252.

[3] Zhou, Bolei, Aditya Khosla, Agata Lapedriza, Antonio Torralba, and Aude Oliva. "Places: An image database for deep scene understanding." arXiv preprint arXiv:1610.02055 (2016).

[4] Places. http://places2.csail.mit.edu/

See Also

alexnet | googlenet | inceptionv3 | densenet201 | darknet19 | darknet53 | resnet18 | resnet50 | resnet101 | vgg16 | vgg19 | shufflenet | nasnetmobile | nasnetlarge | mobilenetv2 | xception | inceptionresnetv2 | squeezenet | importTensorFlowNetwork | importTensorFlowLayers | importNetworkFromPyTorch | importONNXNetwork | importONNXLayers | exportNetworkToTensorFlow | exportONNXNetwork | Deep Network Designer

Related Topics

- Deep Learning in MATLAB

- Transfer Learning with Deep Network Designer

- Extract Image Features Using Pretrained Network

- Classify Image Using GoogLeNet

- Train Deep Learning Network to Classify New Images

- Visualize Features of a Convolutional Neural Network

- Visualize Activations of a Convolutional Neural Network

- Deep Dream Images Using GoogLeNet