GPU Coder™ generates optimized CUDA® code from MATLAB® code and Simulink® models. The generated code includes CUDA kernels for parallelizable parts of your deep learning, embedded vision, and signal processing algorithms. For high performance, the generated code calls optimized NVIDIA® CUDA libraries, including TensorRT™, cuDNN, cuFFT, cuSolver, and cuBLAS. The code can be integrated into your project as source code, static libraries, or dynamic libraries, and it can be compiled for desktops, servers, and GPUs embedded on NVIDIA Jetson™, NVIDIA DRIVE™, and other platforms. You can use the generated CUDA code within MATLAB to accelerate deep learning networks and other computationally intensive portions of your algorithm. GPU Coder also lets you incorporate handwritten CUDA code into your algorithms and into the generated code.

When used with Embedded Coder®, GPU Coder lets you verify the numerical behavior of the generated code via software-in-the-loop (SIL) and processor-in-the-loop (PIL) testing.

Deploy Algorithms Royalty-Free

Compile and run your generated code on popular NVIDIA GPUs, from desktop systems to data centers to embedded hardware. The generated code is royalty-free, so you can deploy it in commercial applications to your customers at no charge.

GPU Coder Success Stories

Learn how engineers and scientists in a variety of industries use GPU Coder to generate CUDA code for their applications.

Generate Code from Supported Toolboxes and Functions

GPU Coder generates code from a broad range of MATLAB language features that design engineers use to develop algorithms as components of larger systems. This includes hundreds of operators and functions from MATLAB and companion toolboxes.

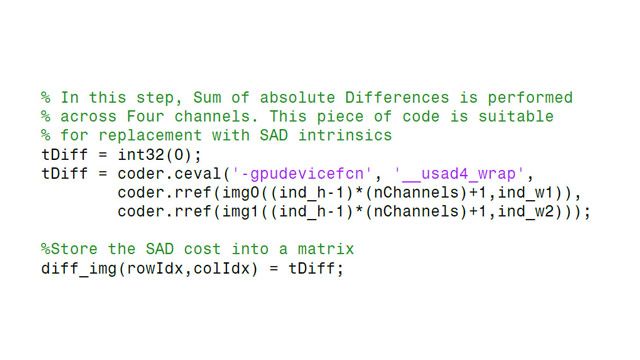

Incorporate Legacy Code

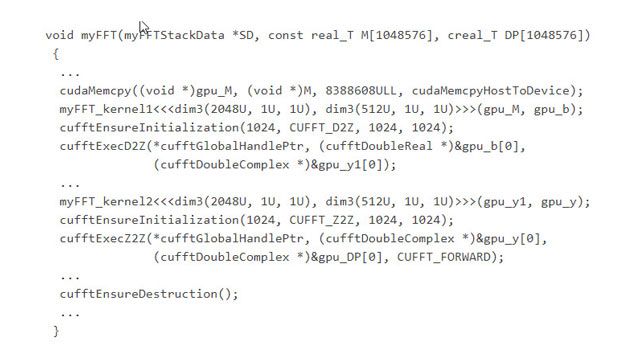

Use legacy code integration capabilities to incorporate trusted or highly optimized CUDA code into your MATLAB algorithms for testing in MATLAB. Then call the same CUDA code from the generated code as well.

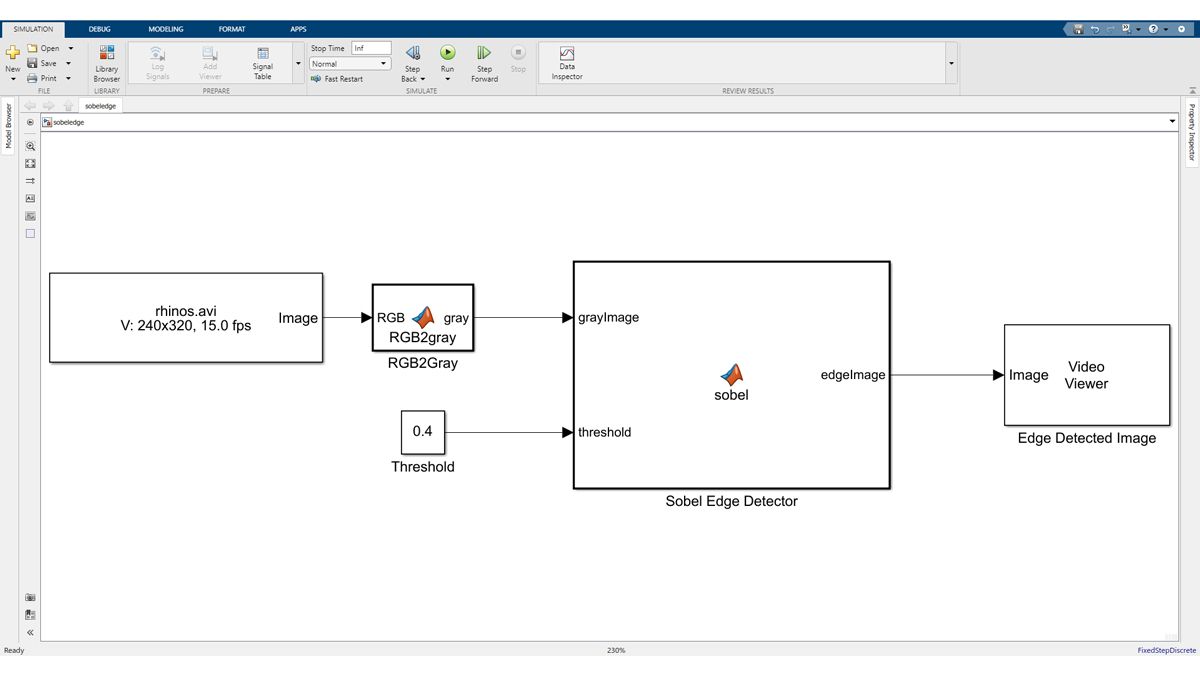

Run Simulations and Generate Optimized Code for NVIDIA GPUs

When used with Simulink Coder™, GPU Coder accelerates compute-intensive portions of MATLAB Function blocks in your Simulink models on NVIDIA GPUs. You can then generate optimized CUDA code from the Simulink model and deploy it to your NVIDIA GPU target.

Deploy End-to-End Deep Learning Algorithms

Use a variety of trained deep learning networks (including ResNet-50, SegNet, and LSTM) from Deep Learning Toolbox™ in your Simulink model and deploy to NVIDIA GPUs. Generate code for preprocessing and postprocessing along with your trained deep learning networks to deploy complete algorithms.

Log Signals, Tune Parameters, and Numerically Verify Code Behavior

When used with Simulink Coder, GPU Coder enables you to log signals and tune parameters in real time using external mode simulations. Use Embedded Coder with GPU Coder to run software-in-the-loop and processor-in-the-loop tests that numerically verify that the generated code matches the behavior of the simulation.

Deploy End-to-End Deep Learning Algorithms

Deploy a variety of trained deep learning networks (including ResNet-50, SegNet, and LSTM) from Deep Learning Toolbox to NVIDIA GPUs. Use predefined deep learning layers or define custom layers for your specific application. Generate code for preprocessing and postprocessing along with your trained deep learning networks to deploy complete algorithms.

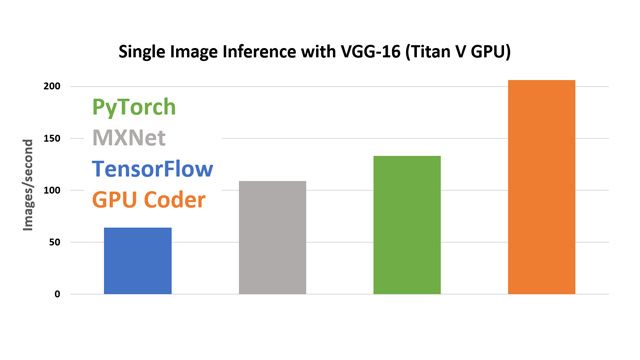

Generate Optimized Code for Inference

GPU Coder generates code with a smaller footprint than other deep learning solutions because it generates only the code needed to run inference with your specific algorithm. The generated code calls optimized libraries, including TensorRT and cuDNN.

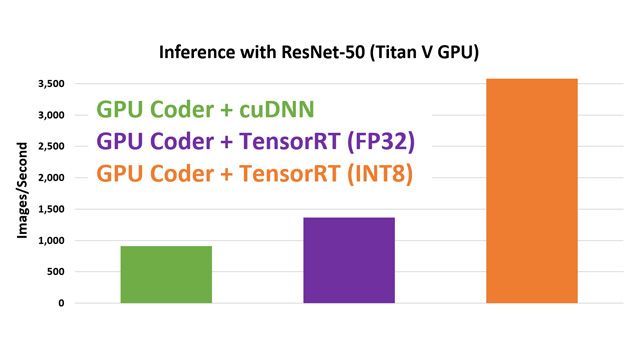

Optimize Further Using TensorRT

Generate code that integrates with NVIDIA TensorRT, a high-performance deep learning inference optimizer and runtime. Use INT8 or FP16 data types for an additional performance boost over the standard FP32 data type.
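As a rough sketch of what targeting TensorRT with a reduced-precision data type can look like, the hypothetical entry-point function `classifyImage` below loads a pretrained network and runs prediction. The function name, the choice of ResNet-50, and the 224-by-224-by-3 input size are assumptions for illustration, and the build commands in the comments assume that GPU Coder, the relevant network support package, and TensorRT are installed and configured. INT8 typically needs additional calibration settings that are not shown here.

```matlab
% File: classifyImage.m (hypothetical entry-point function)
function scores = classifyImage(img) %#codegen
% Load the network once and reuse it across calls.
persistent net;
if isempty(net)
    % 'resnet50' assumes the pretrained ResNet-50 support package is installed.
    net = coder.loadDeepLearningNetwork('resnet50');
end
scores = predict(net, img);
end

% Build commands, run at the MATLAB command line (assumed setup):
%   cfg = coder.gpuConfig('mex');
%   cfg.TargetLang = 'C++';
%   cfg.DeepLearningConfig = coder.DeepLearningConfig('tensorrt');
%   cfg.DeepLearningConfig.DataType = 'fp16';   % default is 'fp32'
%   codegen -config cfg -args {ones(224,224,3,'single')} classifyImage
```

Comparing the FP16 scores against the FP32 scores on a few representative inputs is a reasonable first check of the accuracy impact.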

Deep Learning Quantization

Quantize your deep learning network to reduce memory usage and increase inference performance. Analyze and visualize the tradeoff between increased performance and inference accuracy using the Deep Network Quantizer app.
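To make the memory and accuracy tradeoff concrete, here is a small worked example of symmetric INT8 quantization applied to a handful of weights. It is a simplified illustration under assumed values, not the algorithm used by the Deep Network Quantizer app; the weight values and variable names are hypothetical.

```matlab
% Simplified symmetric INT8 quantization of a weight vector
% (illustrative only; not the toolbox's internal algorithm).
w = single([-0.503 0.251 0.104 1.27]);   % hypothetical FP32 weights

scale = max(abs(w)) / 127;     % map the largest magnitude to 127
q = int8(round(w / scale));    % quantized weights, 1 byte each
wHat = single(q) * scale;      % dequantized approximation of w

maxErr = max(abs(w - wHat));   % worst-case rounding error
bytesFP32 = numel(w) * 4;      % 4 bytes per single-precision value
bytesINT8 = numel(q) * 1;      % 1 byte per int8 value

fprintf('max error %.4g, storage %d bytes -> %d bytes\n', ...
    maxErr, bytesFP32, bytesINT8);
```

In this scheme the storage drops by a factor of four, and the rounding error of each weight is bounded by half the scale factor.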

Minimize CPU-GPU Memory Transfers and Optimize Memory Usage

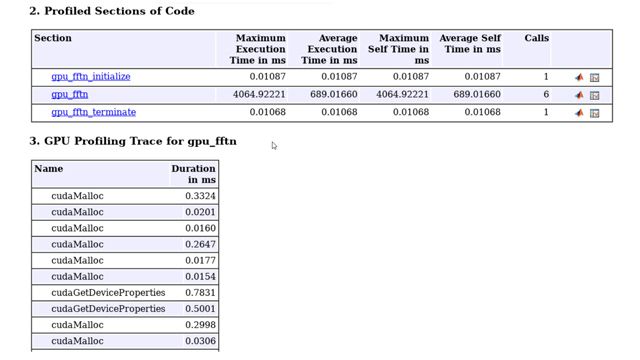

GPU Coder automatically analyzes, identifies, and partitions segments of MATLAB code to run on either the CPU or GPU. It also minimizes the number of data copies between CPU and GPU. Use profiling tools to identify other potential bottlenecks.

Invoke Optimized Libraries

Code generated with GPU Coder calls optimized NVIDIA CUDA libraries, including TensorRT, cuDNN, cuSolver, cuFFT, cuBLAS, and Thrust. Code generated from MATLAB toolbox functions is mapped to optimized libraries whenever possible.

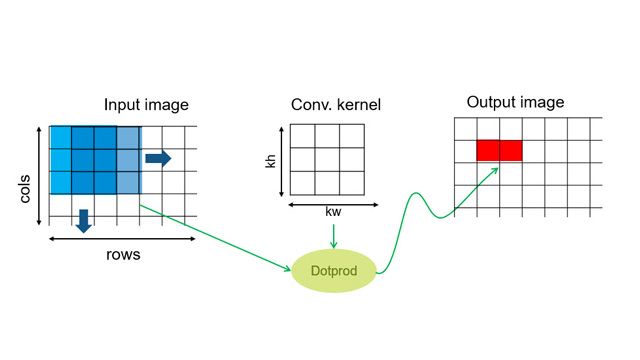

Use Design Patterns for Further Acceleration

Design patterns such as stencil processing use shared memory to reduce redundant global memory accesses and improve effective memory bandwidth. GPU Coder applies them automatically for certain functions, such as convolution. You can also invoke them manually using specific pragmas.

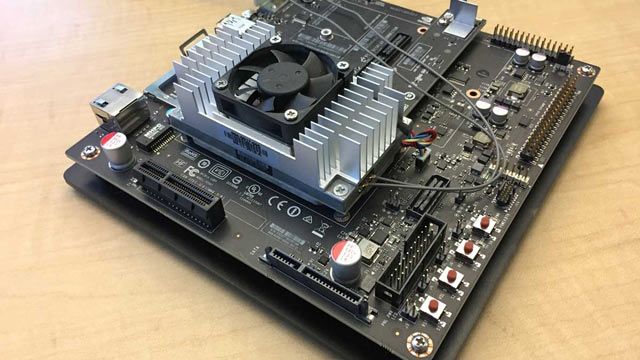
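One way to invoke the stencil pattern manually is with `gpucoder.stencilKernel`, sketched below for a 3-by-3 mean filter. The function names `meanFilter3x3` and `windowMean` are hypothetical, and the recommended stencil interface may differ between releases, so check the documentation for your version.

```matlab
% File: meanFilter3x3.m (hypothetical entry-point function)
function B = meanFilter3x3(A) %#codegen
% Apply windowMean to every 3-by-3 sliding window of A. With 'same',
% the output has the same size as A and borders are zero-padded.
B = gpucoder.stencilKernel(@windowMean, A, [3 3], 'same');
end

function out = windowMean(window)
% window is the 3-by-3 neighborhood around one output element.
out = cast(0, 'like', window);
for col = 1:3
    for row = 1:3
        out = out + window(row, col);
    end
end
out = out / 9;
end
```

Because each output element reads nine overlapping inputs, neighboring threads share most of their data, which is why staging tiles in shared memory pays off for this kind of computation.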

Prototype on NVIDIA Jetson and DRIVE Platforms

Automate cross-compilation and deployment of generated code onto NVIDIA Jetson and DRIVE platforms using GPU Coder Support Package for NVIDIA GPUs.
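A deployment to a Jetson board might be configured along the following lines. This is a sketch under assumptions: the board name, the credentials, the remote build folder, and the entry-point function `vectorAdd` are all placeholders, and the commands require the support package and a reachable board.

```matlab
% Connect to the board. The host name and credentials are placeholders.
hwobj = jetson('jetson-board-name', 'ubuntu', 'ubuntu');

% Configure a standalone executable build for the Jetson target.
cfg = coder.gpuConfig('exe');
cfg.Hardware = coder.hardware('NVIDIA Jetson');
cfg.Hardware.BuildDir = '~/remoteBuildDir';          % build folder on the board
cfg.GenerateExampleMain = 'GenerateCodeAndCompile';  % use the generated example main

% vectorAdd.m is a hypothetical entry-point function on the MATLAB path
% that takes two 1-by-100 double vectors.
codegen('-config', cfg, 'vectorAdd', '-args', {1:100, 1:100});

% Launch the deployed executable on the board.
pid = runApplication(hwobj, 'vectorAdd');
```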

Access Peripherals and Sensors from MATLAB and Generated Code

Remotely communicate with the NVIDIA target from MATLAB to acquire data from webcams and other supported peripherals for early prototyping. Deploy your algorithm along with peripheral interface code to the board for standalone execution.

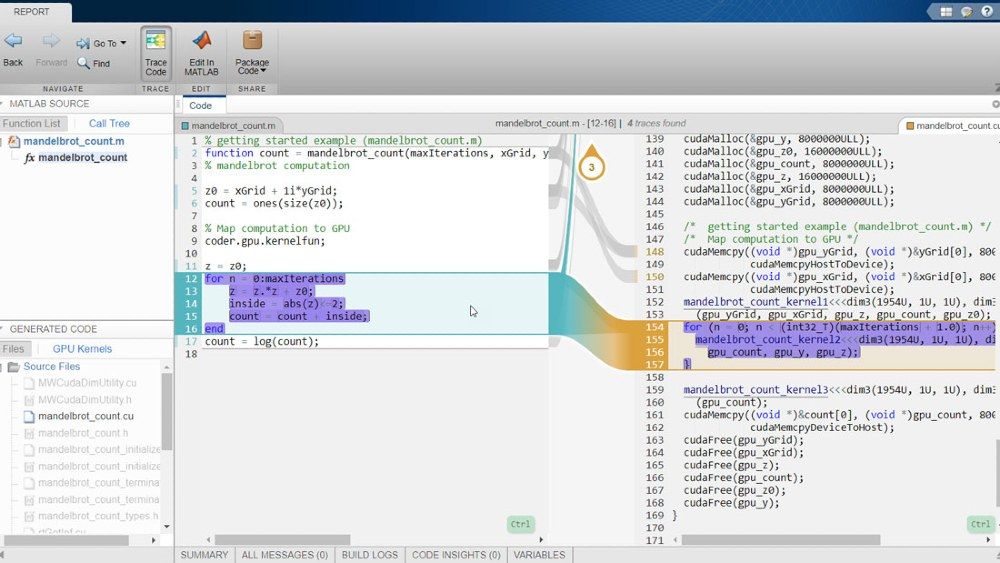

Move from Prototyping to Production

Use GPU Coder with Embedded Coder to interactively trace your MATLAB code side-by-side with the generated CUDA code. Verify the numerical behavior of the generated code running on the hardware using software-in-the-loop (SIL) and processor-in-the-loop (PIL) testing.

Accelerate Algorithms Using GPUs in MATLAB

Call generated CUDA code as a MEX function from your MATLAB code to speed execution, though performance will vary depending on the nature of your MATLAB code. Profile generated MEX functions to identify bottlenecks and focus your optimization efforts.
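As a minimal sketch of this workflow, the hypothetical entry-point function `scaleAndAdd` below marks its body for kernel generation with the `coder.gpu.kernelfun` pragma. The function name, input sizes, and data types are assumptions chosen for illustration, and the commented commands show one way to build and call the MEX function when GPU Coder and a supported NVIDIA GPU are available.

```matlab
% File: scaleAndAdd.m (hypothetical entry-point function)
function y = scaleAndAdd(a, x, b) %#codegen
% Ask GPU Coder to map the computation in this function to CUDA kernels.
coder.gpu.kernelfun;
y = a .* x + b;
end

% Build and call from the MATLAB command line (assumed setup):
%   cfg = coder.gpuConfig('mex');
%   x = ones(1, 4096, 'single');
%   codegen -config cfg -args {single(1), x, x} scaleAndAdd
%   y = scaleAndAdd_mex(single(2), x, x);
```

Comparing the MEX output with the original MATLAB function on the same inputs is a quick correctness check before you start profiling.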

Accelerate Simulink Simulations Using NVIDIA GPUs

When used with Simulink Coder, GPU Coder accelerates compute-intensive portions of MATLAB Function blocks in your Simulink models on NVIDIA GPUs.